Are we entering a world of societies “whose members spend a great part of their time, not on the spot, not here and now and in the calculable future, but somewhere else, in the irrelevant other worlds of…mythology and metaphysical fantasy”, as Aldous Huxley posited in 1932 in Brave New World? That worry was raised in an article by Matthew Syed in the Sunday Times of 12th February, headlined “We’ve never had so much information at our fingertips – and so little wisdom to do anything useful with it.”

In a world of excess and confusion, concentrating on balance and identifying important truths is a route to sanity to be treasured. Nowhere is that excess and confusion more in our faces than in the universe of information overload. Three old-fashioned sayings come to mind: “Don’t believe everything you read in the newspapers”, “Seeing the wood for the trees”, and “What I tell you three times is true”. The Search for Truth in the media is also discussed in these pages in an article of 15 November 2022.

ARTIFICIAL INTELLIGENCE, WONDROUS OPPORTUNITY BUT MORE CONFUSION

ChatGPT and similar generative AI systems are being developed at a rate faster than the average person can keep pace with. They represent a new wave of information generators in what is already an overcrowded ocean of broadcasters, inventors and influencers, let alone national agencies, analysing, editing and distributing data, information, opinion, and propaganda. On a user’s request, ChatGPT, launched last November, is capable of producing very quickly a summary or a treatise on whatever topic is requested, as well as legal and other documents, even poetry of sorts, in the languages and styles the user demands. It applies natural language processing, so the precise wording of a request determines the answer, yet answers to the same question can also change from day to day. And its products are presented in a way that makes them appear credible, and the appearance of credibility can easily be mistaken for accuracy, completeness and reasonableness. As with so much social media information, let alone that on TV or in journals, the average person who is not an expert on the topic in question might easily believe the product is of good quality and accuracy: of course it may be, but equally it might be low grade, wrong, misleading and quite useless to rely on. How will the user know without some verification support?

The systems remain at an early stage, but their potential in the knowledge universe is astonishing, as is the potential of AI for good in so many areas such as medicine. ChatGPT’s reliability and quality today are openly questioned, and its promoters rightly emphasise its weaknesses while accepting the challenge to iron them out. Its extraordinary “skill” is to “sweep” the internet for information on a topic and then, having weighed what it finds, to assemble what it deems appropriate in a way that satisfies the user’s request. This is in addition to natural language processing. Of course, the information it acquires in this way can itself be wrong, deliberately falsified, incomplete and manipulative, as so much on the internet is. That gets combined with the information on the internet that is of good quality, and out pops an egg: no source identified, and looking credible even if full of error or omitting key pieces.

This technology will develop quickly: in some cases perhaps in the hands of people who want to benefit mankind, but also in the hands of people who are malevolent, with motivations that may include political and social harm or simply making money. Distinguishing good from bad information is the big challenge facing all regulators and users. Integrity in the design of products is possibly a diminishing feature. Balancing controls on harmful media content against freedom of speech and other freedoms is delicate. The internet itself, and communication tools like email, grew exponentially once off the ground. There were no roadmaps as to how to use them sensibly or just for social good, and users gained benefits as they went along, by trial and error. But of course, the criminal or immoral have been able to abuse these facilities and their users. That pornography, and with it misogynistic attitudes, are legion is proving socially disastrous. There must perhaps be misgivings over the extent to which the new generative AI products can be harnessed for good. Also, is it the case that, as with social media platforms, the real driving force behind the development of generative AI products is simply to make money? That driving force posing an Existential Threat is discussed in these pages in an article in January 2020.

DISCERNING TRUTH AND THE WOOD FROM THE TREES

As was aired in The Search for Truth, it is nigh impossible to discern true facts from misinformation, and so draw reasonable conclusions, especially where the information comes from unknown sources or from a publisher whose agenda dominates its approach, whether a political bias or a “cause” which it promotes. People must find sources they reasonably trust and, if a topic is important, triangulate with other sources to test veracity, accuracy and completeness. Not trusting everything we read in newspapers, among which we should now include social media and other publishing platforms, remains a necessary cynicism to preserve the integrity of truth. Influencers whom their followers instinctively worship are to be treated even more cynically: celebrities who pronounce judgement on major world issues, or even on matters such as diet, while having done barely any research, or who may be burdened by severe bias, or may even have a financial interest in what they promote, are, unless treated with caution, dangerous to truth. Then there are the so-called “progressives” who would rewrite history to reflect their own judgement of past behaviours; all of these undermine fact-based consideration. Rewriting Roald Dahl books based on subjective fantasies is a classic exercise in distortion, and fortunately generated an outraged backlash. Unfortunately, these groups are vocal and active, unlike most of the silent but sensible majority, and perhaps cause more disruption than their weight justifies. It is essential to beware the repetition of misinformation.

The more a cause is pleaded, perhaps the less its validity. Perhaps too, beware of “what I tell you three times is true” when it is false: throughout the pandemic and since, there have been numerous campaigns repeating gossip, slur and allegation as if they were fact. This strategy of promulgating the same story over and over again, albeit incomplete, misleading and without evidence, is perhaps more and more common. Add, too, the billions of people with “devices” who receive from Facebook, TikTok and other platforms news, adverts and information tailored to them: what they want to hear. And, some Western authorities say, TikTok for example carries a risk of manipulation and data collection by the Chinese Communist Party because of its Chinese background. Whatever the truth, the confusion alone is mind-boggling.

Discerning truth is problematic enough. But the sheer volume of noise emanating from every corner of publishing swamps people’s capacity to take in what is important and see the wood for the trees. Apparently in 2022 there were roughly 500 million tweets a day. It is said there are 100 billion WhatsApp messages generated each day. There are between 3 and 4 million podcasts available. There are hundreds of thousands, or millions, of sources of information. Matthew Syed’s article further reflected the concern of Aldous Huxley in “Brave New World” that “we would be deluged by information… struggle to find truth amid swirling currents of data and become ever more side-lined by waves of triviality”. How prescient.

Different people prioritise different sources. The old will perhaps adopt a different truth test from the young. ChatGPT and its equivalents are yet another source, and hundreds of millions of people are unsurprisingly experimenting with them. Their developers are now distributing imperfect products for free, and so establishing a “user” base, as they seek to develop a product of sufficient quality. The aim must be to monetise the technology, presumably via advertising at least, or even subscription, and then perhaps there will again be personally targeted “stuff” the individual likes to hear. And so, a new generation of media drug will be there for consumption. The forest will be getting thicker and the undergrowth harder to navigate. How will we find the wood without new navigation tools?

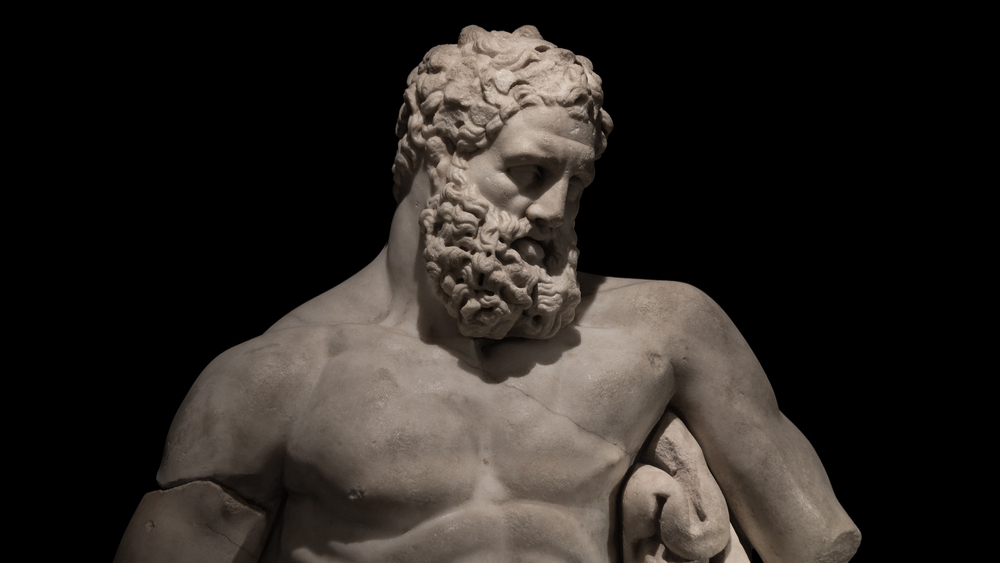

WHAT TO DO? A HERCULEAN CHALLENGE

The human mind can only absorb a fraction of what is available. Too much useless information poisons the important “stuff”, and so much of what is pushed forward is trivia, let alone inaccurate. Seeing the wood for the trees when it comes to information is a constant challenge that each person must grapple with, and one for which training may be needed. Is it perhaps imperative that we learn to focus on what is important, and instil a discipline of balance in our use of the information sources available? Clicking excessively on numerous social media channels will perhaps produce just a cacophony of mush. A good discipline may be to be exceedingly wary of information which has no clearly identified original source, and to assess possible sources for reliability, dismissing those where there are obvious questions as to motive. Each of us will have special subjects of interest, and in those the real detail can be analysed. But for most topics it will be necessary to trust others to explain detail honestly and fairly. Trial and error may decide whom to trust.

This year Cambridge University students voted, by a majority of those voting, that only vegan food should be available throughout the University. Hopefully, this will not be implemented, as it would be an extreme authoritarian restriction of choice for omnivore students. But perhaps it is relevant to ask who the active sponsors of the resolution were. Were they zealot vegans? Was there a balanced account of the health hazards of veganism when it is not accompanied by proper supplements of the essential nutrients naturally obtained from animal products? Was there a fair presentation, a balance of argument?

Then, in the Daily Telegraph editions in early March, highly judgemental journalism is focussing on leaked WhatsApp messages involving Matt Hancock, officials and others about issues in the pandemic. The clear intention is to draw conclusions about decision making, and to establish criticism. This is all presented out of context, in the sense that there is no presentation of all the other behind-the-scenes thinking, conversations and meetings, or any nuancing. WhatsApp chats, like the idiocy of much of Twitter, are a dangerous source of evidence, and using them to convict a person risks grave kangaroo court mistakes and abusive assertions about failings that wrongly serve political purposes. There were enough appalling accusations without full evidence during the pandemic, with vociferous voices for lockdowns and closing of schools, or vice versa, which are now being contradicted in some spaces. The Daily Telegraph is prompting some rather self-serving “I told you so” commentaries of which we should be wary. Perhaps both this new witch hunt and the Cambridge students’ vote are lessons in wariness of allegations or decisions made or taken using incomplete information.

Maybe the most worrying feature of information overload and the plethora of sources is that regulation is always playing catch-up. The lack of clear high standards consistently applied across platforms, and the lack of true care about putting the public’s interests first among commercial suppliers and providers, and the technicians they engage to develop ever more exciting products, pose the biggest risk. Authorities need to provide health warnings and information to enable people to assess risk. As is so often the case, in discerning truth it is the responsibility of the individual to make careful and moral choices, to reject the sources that might do harm, to be intelligent about what is to be believed, and to apply balanced common sense. It may be important too to be willing to change one’s mind when what might once have been thought true and fairly presented turns out to be otherwise.

Each person must perhaps consider how best to prevent their capacity to think things through and reach informed reasonable judgements being seriously impaired by absorbing the wrong stuff and excessive irrelevant trivia.

P.S. Don’t look now, but as the Financial Times of 4th March 2023 warns in an article by Camilla Cavendish, neurotechnology is on the march. “The emerging ability to track and decode what goes on in the human brain requires a serious conversation about how we use it.”